Blackout Blob

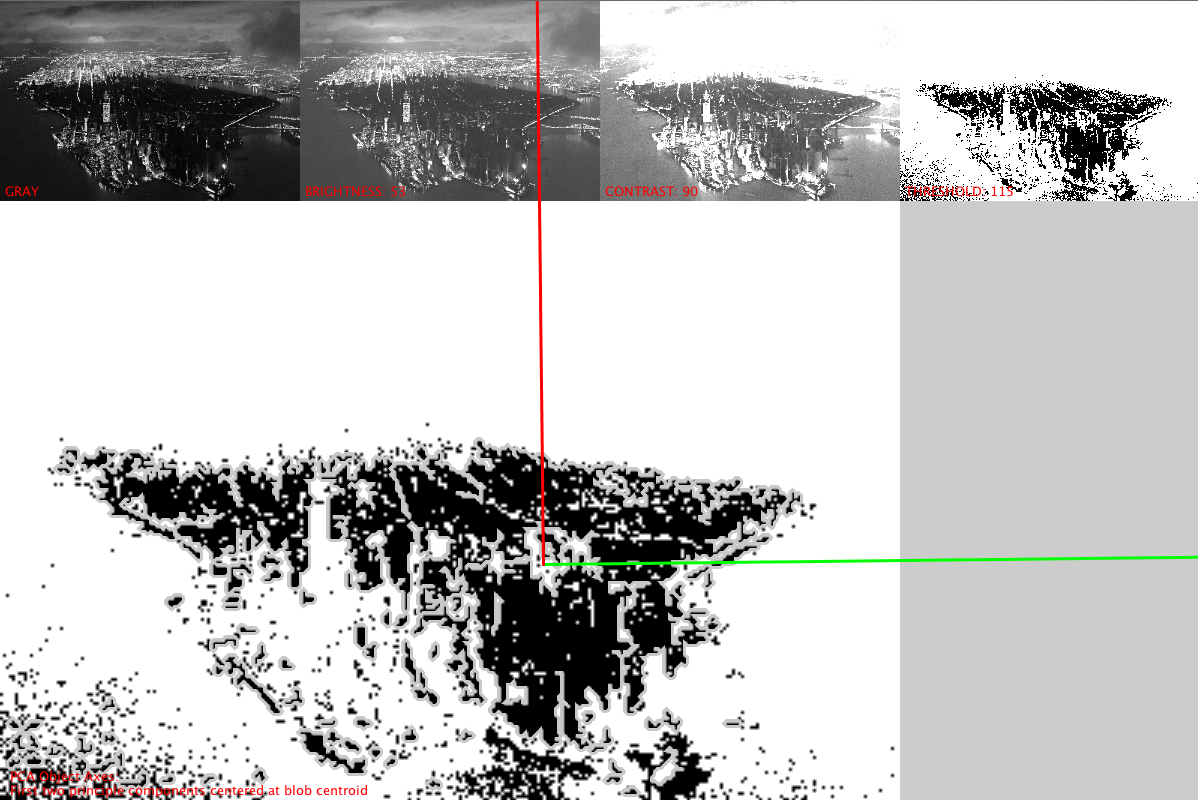

This week, New York magazine had a series of striking aerial photographs of the city’s blackout in the aftermath of Superstorm/Hurricane Sandy. Since the blackout covers such a large area, it works well for blob detection.

![]()

Principle Component Analysis (PCA) can be used to determine the orientation of an object in space, i.e. what direction it is pointing. One of Greg’s examples used blog detection and PCA to show the direction of a pen on a table. I thought it might be interesting to apply this code to the image of the blackout to see what would happen.

I ended up tweaking the brightness, contrast, and threshold values quite a bit before I was able to get a result I was happy with. When the brightness and contrast are set too high, it detects the lit area instead of the dark area. Higher threshold values change the location of the blob’s centroid. A few minor changes to these values can also change the perceived orientation of the blob. The tricky part is that I’m not entirely sure what the “right” answer is. I ended up settling on the up orientation (where the heaviest part of the blob is considered the “top”), since it is closest to the north/south orientation of a map. It is tilted in space a bit as a result of the way the photo was taken, and ideally this would probably have made more sense with a photo taken directly from above. Either way, the result is interesting to look at.

The code is below. Additional components on Github.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 | //Kim Ash //Makematics - Fall 2012 //pca_nyc - takes map of NYC blackout and analyzes orientation of unlit area import Jama.Matrix; import pca_transform.*; import hypermedia.video.*; PCA pca; PVector axis1; PVector axis2; PVector mean; OpenCV opencv; PImage img; int imgWidth = 1200/4; int imgHeight = 800/4; void setup() { size(1200, 800); opencv = new OpenCV(this); opencv.loadImage("data/NYCsandy.jpg", imgWidth, imgHeight); noLoop(); } Matrix toMatrix(PImage img) { ArrayList<PVector> points = new ArrayList<PVector>(); for (int x = 0; x < img.width; x++) { for (int y = 0; y < img.height; y++) { int i = y*img.width + x; if (brightness(img.pixels[i]) == 0) { points.add(new PVector(x, y)); } } } println("nBlackPixels: " + points.size() + "/" + img.width*img.height); Matrix result = new Matrix(points.size(), 2); for (int i = 0; i < points.size(); i++) { result.set(i, 0, points.get(i).x); result.set(i, 1, points.get(i).y); } return result; } int currX = 0; int currY = 0; void imageInGrid(PImage img, String message) { image(img, currX, currY); fill(255, 0, 0); text(message, currX + 5, currY + imgHeight - 5); currX += img.width; if (currX > width - img.width) { currX = 0; currY += img.height; } } void draw() { //background(255); opencv.convert(GRAY); imageInGrid(opencv.image(), "GRAY"); opencv.brightness(53); imageInGrid(opencv.image(), "BRIGHTNESS: 53"); opencv.contrast(90); imageInGrid(opencv.image(), "CONTRAST: 90"); opencv.threshold(115); imageInGrid(opencv.image(), "THRESHOLD: 115"); Matrix m = toMatrix(opencv.image()); pca = new PCA(m); Matrix eigenVectors = pca.getEigenvectorsMatrix(); eigenVectors.print(10, 2); axis1 = new PVector(); axis2 = new PVector(); axis1.x = (float)eigenVectors.get(0, 0); axis1.y = (float)eigenVectors.get(1, 0); axis2.x = (float)eigenVectors.get(0, 1); axis2.y = (float)eigenVectors.get(1, 1); axis1.mult((float)pca.getEigenvalue(0)); axis2.mult((float)pca.getEigenvalue(1)); image(opencv.image(), 0, opencv.image().height, opencv.image().width*3, opencv.image().height*3); Blob[] blobs = opencv.blobs(10, imgWidth*imgHeight/2, 100, true, OpenCV.MAX_VERTICES*4 ); noFill(); stroke(200); pushMatrix(); translate(0, imgHeight); scale(3, 3); for ( int i=0; i<blobs.length; i++ ) { beginShape(); for ( int j=0; j<blobs[i].points.length; j++ ) { vertex( blobs[i].points[j].x, blobs[i].points[j].y ); } endShape(CLOSE); } PVector centroid = new PVector(blobs[0].centroid.x, blobs[0].centroid.y); translate(centroid.x, centroid.y); stroke(0, 255, 0); line(0, 0, axis1.x, axis1.y); stroke(255, 0, 0); line(0, 0, axis2.x, axis2.y); popMatrix(); fill(255,0,0); text("PCA Object Axes:\nFirst two principle components centered at blob centroid", 10, height - 20); } void draw(Matrix m) { double[][] a = m.getArray(); for (int i = 0; i < m.getRowDimension(); i++) { ellipse((float)a[i][0], (float)a[i][1], 3, 3); } } |